Tuesday March 3rd, 2026

I'm spending a lot of time today reading up on Model Context Protocol and "best practices" when using MCP (which, gotta say, is different from the MCP acronym I grew up with). Which... this conversation on Metafilter. caviar2d2 opined:

Having developed software for 30 years, if I look back, most of the software being developed in the US today has a negative net impact on society and people.

flabdablet observed that writing code has not been the bottleneck:

Surely all it will take to clean those Augean stables is devising some way to scale today's excretion rate to at least 10x.

on which Sparx riffed:

I love this!

"Observe! I have invented a hose of such intense pressure that it will clean the Augean Stables!"

"Amazing. Those stables are disgusting. Wait - don't you think you should use water?"

"I need all the water to keep this baby cool."

"So what are you using?"

"Just some other stuff I found. The stables are full of it."

Since there's currently an orchestrated push to destroy anonymity on the Internet: Politico: Resist ‘dangerous and socially unacceptable’ age checks for social media, scientists warn

The warning comes as countries around the world move to bar children from social media, which requires some way of checking users’ ages to decide if they can access online services. In an open letter, 371 security and privacy academics across 29 countries said the technologies being rolled out are not effective and carry significant risks.

California Assembly Bill 1043: AB-1043 Age verification signals: software applications and online services. apparently makes it illegal to configure an operating system without confirming the user's age, similarly for Colorado Senate Bill SB 26-051: AGE ATTESTATION ON COMPUTING DEVICES

Taylor Lorenz in The Guardian: The world wants to ban children from social media, but there will be grave consequences for us all. In response to the toot linking to that, Alan @metaphase@toot.community asked:

@taylorlorenz Who is paying for the lobbyists for this seemingly worldwide campaign for the legislation to install identity surveillance everywhere "for the children"?

And why, even in blue states, are the politicians always so eager to enable tools so easily abused by authoritarian, fascist governments

Monday March 2nd, 2026

Uncle Duke @UncleDuke1969@universeodon.com

Don’t put ants in your ears

Or bees in your anus

Lick a porcupine once

You’ll find out what pain is

And there’s just one last thing

One final reminder

Please don’t put ground-up wasp nests in your vagina

Today's Timdle putting the beginning of the Discovery Channel up against Anna Wintour's Vogue era, *and* the Glee pilot episode against the world population hitting 7B, feels unfair...

On Saturday evening, Charlene and I were sitting out on our front patio eating dinner and watching drivers run the Mission & Mountain View stop signs (spending some more time gathering video of this in order to make a montage to post to Facebook and NextDoor titled "those fucking bicyclists" is a fantasy project).

The social media comments on the news of two recent killings of cyclists on rural roads around Petaluma are filled with "yeah, that road isn't safe for bicycles, I don't know what they were doing there".

We hear that the city has over 200 requests for traffic calming and safety improvements in their barely funded safe streets programs.

But here we have an example of where a metropolitan region of 1.5 million people has decided that killing people for convenience is not acceptable.

Helsinki just went a full year without a single traffic death.

Sunday March 1st, 2026

The worst part about setting up filters to mute armchair pundits spewing about an assassinated foreign leader is trying to capture all of the different spellings that people shamelessly trying to acquire eyeballs and followers are slamming out there.

Late to the party, I know, everyone has been suggesting this, but if you haven't seen it yet carve out 22 minutes and watch "A Friend of Dorothy". And I will pre-buy tickets to whatever film Lee Knight makes next.

So we prune bushes, do we use the name of other dehydrated stone fruit for the act of lopping off bits of other things?

Saturday February 28th, 2026

The writers of 2026 are having to go to particularly ridiculous lengths to get headlines. Lockout Supplements Issues Voluntary Nationwide Recall of Boner Bears Chocolate Syrup Due to Undeclared Sildenafil

Via.

We need a better phrase than "AI psychosis" or "AI addiction" for what this is.

What we're seeing with people becoming emotionally and intellectually dependent on computer processes, as a direct consequence of the way both mass and social media have for generations been designed to exploit parasocial fascinations and protagonism.

The entire Internet of social interaction, fandom, and fantasy, was slurped up in service of training these language models in how to act human. With that kind of pedigree, you know damn well how competent they will be at that, in a variety of fairytale ways. The ultimate "Choose Your Own Adventure" storyline.

This is a problem well beyond ethical concerns like intellectual property rights. What's been documented so far, is the December of a global mental health pandemic that may well make the Covid isolation blues seem mild. But for me, the descriptors of "psychosis" and "addiction" don't really cut it.

Being Left Behind Enjoyer @thomasfuchs@hachyderm.io

Just thought about something that bugged me about that Apple “Crush” iPad ad (from two years ago).

The whole image it conveys is that personal computers went from bicycles for the mind to trash compactors for your dreams is just so aptly describing the modern tech industry.

Carry on.

datarama @datarama@hachyderm.io

I apparently live in a world where a totally normal thing that happens is: Linux filesystem maintainer declares that his AI agent is conscious and also a girl btw, and then the AI agent comes out as a trans lesbian after flirting with someone on IRC. Linux filesystem maintainer throws a fit.

I... I think I'm too old for this.

The Register: Bcachefs creator insists his custom LLM is female and 'fully conscious'

It's not chatbot psychosis, it's 'math and engineering and neuroscience'

As an ethical AI user, I begin each session by asking the chatbot to give a stolen data acknowledgement. It is an important first step toward justice.

CNN: A Chinese official’s use of ChatGPT accidentally revealed a global intimidation operation

The Chinese law enforcement official used ChatGPT like a diary to document the alleged covert campaign of suppression, OpenAI said. In one instance, Chinese operators allegedly disguised themselves as US immigration officials to warn a US-based Chinese dissident that their public statements had supposedly broken the law, according to the ChatGPT user. In another case, they describe an effort to use forged documents from a US county court to try to get a Chinese dissident’s social media account taken down.

Windy city @pheonix@hachyderm.io

Before you buy that nice hoodie online, ask yourself, "Am I willing to delete one extra email every day for the rest of my life?"

Friday February 27th, 2026

Yeah. New rule. I check your website, I see any hint of "AI assistant" or "AI" used in support, I nope the fuck out of your services or products.

Holy shit this is bad.

Friend has Hostinger hosting. We've been trying to get their "Kodee" LLM assistant to fix things.

If your business advertises an "AI" assistant, we can assume that you are actively customer hostile and want to waste customer time rather than fixing anything. Blanket rule.

Thursday February 26th, 2026

Boulder Cast: A Complete Failure of Winter Across the West — And What It Means for the Rest of 2026

A stubborn North American dipole pattern locked into place: a deep, frigid trough dominating the eastern U.S. while a warm, bloated ridge camped over the West or just offshore. La Niña helped tilt the scales, but the dipole did the heavy lifting. The result was astonishing, historic, and frankly unsettling. Entire western states logged their warmest winter on record. Meanwhile, the East endured weeks‑long cold snaps not seen in decades, with snow and ice reaching the Gulf Coast and Deep South. Coastal North Carolina has seen more snow than Denver. Parts of Florida have out‑snowed Salt Lake City. That’s how upside‑down this season has been.

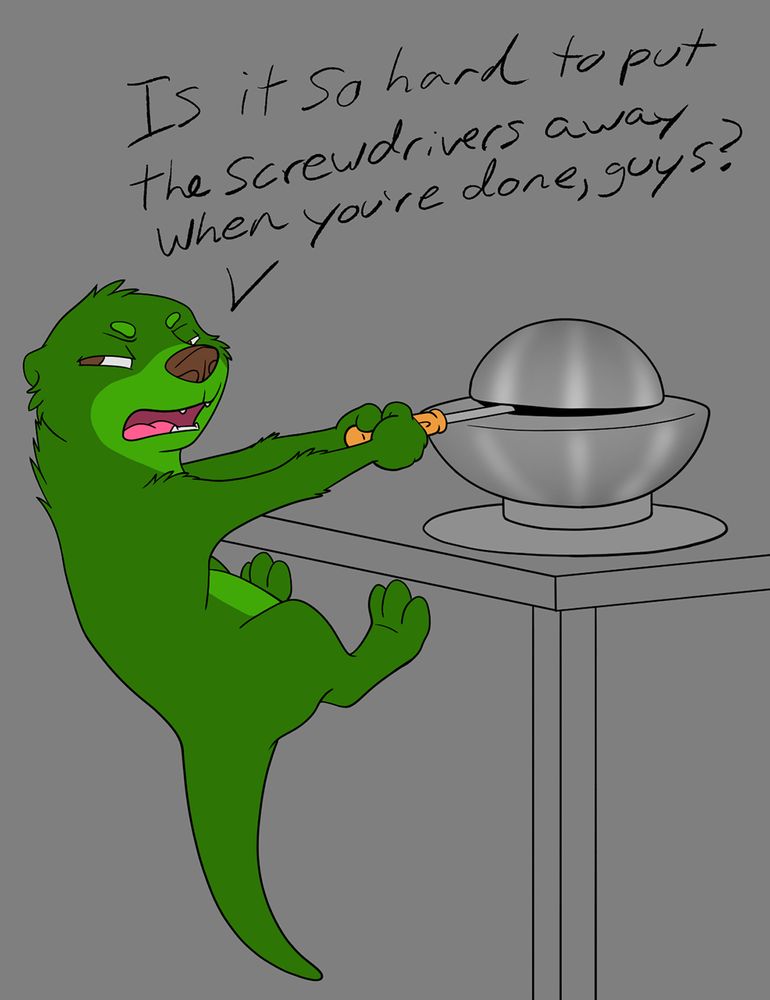

I don't know why this particular image makes me giggle like it does, but Pickl es! @misterpickleman.bsky.social:

Post a meme made by you.

One of the things about being conscious of automobile statistics is just how hard it is to do things like find what to normalize against, and understand error and biases and all of that, so the caveat to this is that the Cybertruck's sales numbers are so abysmally low that it's really hard to extrapolate from 5 reported fire fatalities, one of which was a suicide, but: Fuelarc: It’s Official: the Cybertruck is More Explosive than the Ford Pinto.

I'm not sure I can actually follow on BlueSky because frankly I have enough of "holy shit the world is fucked up" right now, especially since his beat is Southern California, and it's not like I need regular reminders that the LAPD and LACSD are criminal enterprises, but...

LAPD Quietly Admits At Least One Of Its Officers In A “Law Enforcement Gang”

Disabled on Paper, Skydiving in Practice: LAPD Officer Charged With Disability Fraud

The Respondents did attach a document to their Response purporting to show minor convictions for marijuana possession in 2009. The Petitioner was four years old in 2009, and the Respondent indicated that the document was supplied by ICE and likely presumed to relate to the Petitioner because the individual in those records had the same name, despite the differences in birthdate, birthplace, parents’ names, and immigration status. This sloppiness further validates the Court’s concerns about the procedures utilized by the Respondents depriving people present in the United States of their liberty.

As Gavin Newsom continues his run for Trump's position, against people who are actually running for, you know, President, it's good to be reminded of who the slimeball really is:

Gawker: Remember When... 38-Year-Old Gavin Newsom Dated a Republican Teen

He brought her to the symphony after apparently disguising her age on Myspace

By way of Just Some Guy @justsomeguy11.bsky.social who noted:

The guy that fucked a 19 year old when he was 38 and owns a wine bar where the tables spell out the word "sex" is a weird terf? Color me surprised!

WSJ on MSN: Americans are leaving the US in record numbers

Last year the U.S. experienced something that hasn’t definitively occurred since the Great Depression: More people moved out than moved in. The Trump administration has hailed the exodus—negative net migration—as the fulfillment of its promise to ramp up deportations and restrict new visas. Beneath the stormy optics of that immigration crackdown, however, lies a less-noticed reversal: America’s own citizens are leaving in record numbers, replanting themselves and their families in lands they find more affordable and safe.

Direct WSJ link, both via.

If the bullshit coming out of Kansas right now isn't making you go "holy fuck", the idea that federal prison officials are blatantly disregarding judicial orders is...

Law Dork: Breaking: Trans inmate's lawyers claim "egregious retaliation" in violation of court order

Trans woman involved in a lawsuit challenging anti-trans policies says federal prison official told her on Feb. 22: “I don’t give a fuck what that judge says, I do what I want.”

Via.

This MeFi comment from caviar2d2. So much good in it, but:

So with AI entering the picture the worldview of your average programmer goes from "I don't love this company and the business part of it, but at least I get to solve fun puzzles" to "oh, I don't get to solve the puzzles anymore, what the hell is this all about" and a sudden reckoning with what a moral stain it is to be writing code...

and:

My wife put it really well over drinks last week:

"AI turns idiots in the workplace from a distraction into a menace." Everyone now seems as smart as everyone else unless you lock them in a Faraday cage and force them to explain themselves. And the smart people are getting dumber every day.

Wednesday February 25th, 2026

Voice teacher suggested we try Moody's Mood For Love, the sheet music version is Amy Winehouse's version, and... holy crap do the producer and I have ... different visions.

Hope someone else follows the same sheet music structure so I don't have to listen to that percussion over and over...

Saturday Morning Breakfast Cereal from June 20, 2017 nails the ChatGPT girlfriend experience.

You were sent here because your contribution triggered our automated and/or manual AI Slop defenses. Specifically, a human maintainer or senior engineer looked at your submission, experienced a profound existential sigh, initiated an immediate socket closure on your contribution, and pasted this URI.

Via.

Omelas Council: "We have created a beautiful society that requires only that a single child suffers."

Techbros: "Have you tried using more?"

Ya know when you see something that's definitely there to troll the older generation, like Tide Pod challenge or whatever? This is so fucking stupid I can't believe it's actually real, on the other hand it is the 2020s, so "so fucking stupid" is completely believable. GQ: What is Bonesmashing? Inside the Extreme Looksmaxxer Technique

As looksmaxxing enters our lexicon, the practice of bonesmashing—tapping your face with a hammer to shape your bone structure—is trailing close behind.

"Retrophrenology" was supposed to be a joke. Although this also probably isn't de-douchebagifying the incels, so, yeah, doesn't work in practice either.

Should probably make a note of this, 'cause it's everywhere right now: Gizmodo: Meta Exec Learns the Hard Way That AI Can Just Delete Your Stuff

One small trick to get you to inbox zero.

TechCrunch: A Meta AI security researcher said an OpenClaw agent ran amok on her inbox.

The X/Twitter post/thread that describes the thing, there are alt links in the Gizmodo text.

The saddest thing about this is the anthropomorphization in the follow-up screencap, with any sort of sense that the LLM has "learned" anything.

A recent study by Triton Digital says 80% of those surveyed consume podcasts both in audio and video. But only 7% consume only the video.

I am surprised that the video consumption is that high.

Daniel P. Huffman @pinakographos@mapstodon.space has a warning to Adobe InDesign users to double-check all of the AI generated content, including image alt text that you might not have known was even there.

Hot on the heels of Goldman Sachs announcing an AI-free index fund (Pivot to AI on the topic), AI Added ‘Basically Zero’ to US Economic Growth Last Year, Goldman Sachs Says

Briggs’ colleague, Goldman Sachs Chief Economist Jan Hatzius, said in an interview with the Atlantic Council that AI investment spending has had “basically zero” contribution to the U.S. GDP growth in 2025.

“We don’t actually view AI investment as strongly growth positive,” said Hatzius. “I think there’s a lot of misreporting, actually, of the impact AI investment had on U.S. GDP growth in 2025, and it’s much smaller than is often perceived.”

Some of this is that the value of the excess manufacturing is accruing to Taiwan and Korea.

Tuesday February 24th, 2026

If Microsoft creates a modern Aibo, does that mean CoPilot is your dog?

(Ref: "CoPilot is my Jesus" from flabdablet https://www.metafilter.com/212...ain-Has-Left-the-Station#8817052 )

I wondered how the NYT could edit Trump's comments to make him sound coherent and like he was speaking in complete sentences, and yet record every "uhm" and "ah" in a quote pulled from the middle of a 90 minute AOC free-form Q&A. Spin Class Case Study: I Caught POLITICO and the New York Times Laundering Pink Slime "News"

How the sexist backlash to AOC and Gretchen Whitmer at the Munich Security Conference led me to a scammy "local news" outlet pushing a coordinated right-wing narrative

Monday February 23rd, 2026

OH: "In biz AIs don't have to be any smarter, or able to appear smarter, than the folks making the purchasing decisions"

and: "an ai can appear to be an expert, to the person making hiring decisions."

New style manual for "AI optimization" just dropped: Search Engine Land: 44% of ChatGPT citations come from the first third of content: Study

Growth Memo: The science of how AI pays attention

I analyzed 1.2 million search results to find out exactly how AI reads. The verdict? It’s a busy editor, not a patient student.

There's just so many of these floating across my world right now that it's hard to link to specific instances, and easier to just observe: CHOAM Nomsky @samthielman.com

Every news story is now “State Senator Randy Pervert (R-Springfield) was indicted on 8 counts of lewd behavior with a child under 12 and has been put on paid leave from his two jobs as Border Patrol liaison and youth pastor at First Presbyterian (PCA) where parishioners say they were ‘blindsided.’”

Anderson Jesus Urquilla-Ramos, Petitioner, v. Donald J. Trump, et al. Civil Action No. 2:26-cv-00066 (PDF)

Antiseptic judicial rhetoric cannot do justice to what is happening. Across the interior of the United States, agents of the federal government—masked, anonymous, armed with military weapons, operating from unmarked vehicles, acting without warrants of any kind—are seizing persons for civil immigration violations and imprisoning them without any semblance of due process. The systematic character of this practice and its deliberate elimination of every structural feature that distinguishes constitutional authority from raw force place it beyond the reach of ordinary legal description. It is an assault on the constitutional order. It is what the Fourth Amendment was written to prevent. It is what the Due Process Clause of the Fifth Amendment forbids.

geekysteven @geekysteven@beige.party

A common theme in science fiction is that if you're in space, don't trust a corporation. And Earth is in space

404 Media: Meta Director of AI Safety Allows AI Agent to Accidentally Delete Her Inbox

Summer Yue, the director of alignment at Meta Superintelligence Labs, a part of the company that is working on a hypothetical AI system that exceeds human intelligence, posted about the incident on X last night. Yue was experimenting with OpenClaw, a viral AI agent that can be empowered to perform certain tasks with little human supervision. OpenAI hired the creator of OpenClaw last week.

Tara Calishain @researchbuzz.bsky.social

Stories like this hit different now

With a link to Peter Mandelson offers to help government with Brexit negotiations from June 2017:

Peter Mandelson has offered to serve on any cross-party commission to advise on Brexit – and backed former prime minister Sir John Major to chair the group.

While quoting Kyle Griffin @kylegriffin1.bsky.social

Breaking Sky News:

Peter Mandelson has been arrested on suspicion of misconduct in public office.

He was led out of his London home by police officers this afternoon.

Mandelson has faced allegations that he leaked market-sensitive information from Downing Street to Jeffrey Epstein.

Which links to Mandelson arrested on suspicion of misconduct in public office:

The first set of files related to Peter Mandelson's appointment as the UK's ambassador to the US will be published in early March, it has been announced in the House of Commons.

A few interesting tidbits in Bloomberg CityLab: An Insurance Expert Appraises the Safety Record of Self-Driving Cars

Are autonomous vehicles operated by companies like Waymo and Tesla making the streets safer? The insurance industry’s research arm is watching closely.

Some good observations about comparing contexts, and the difficulty of getting and analyzing good data. Via.

Two excellent dystopian fiction podcasts that are having trouble delivering their last few episodes, Life with Althaar, and Metropolis, have me wondering if part of why they're stalled is just that they predicted current events so well that they're struggling to satirize the present.

Sunday February 22nd, 2026

https://doi.org/10.7326/ANNALS-25-00997

Via Chise @sailorrooscout.bsky.social

WELL NOW, would you look at that? A massive, 24-YEAR-LONG study of MORE THAN 1.2 MILLION people in Denmark found NO LINK between aluminum in childhood vaccines and autism, asthma, OR chronic disorders.

Aluminum is an ADJUVANT.

It ENHANCES your immune response to a vaccine.

That’s it.

The US envoy left out that Iran currently has no access to its material, no machines to enrich it, and no weapons program to use it for any operational purpose.

And, quoting Witkoff's Fox News appearance:

“They’re probably a week away from having industrial-grade bomb-making material. And that’s really dangerous. So they can’t have that,” Witkoff said on Fox News’s My View with Lara Trump, clearly wanting to highlight the severity of the potential future nuclear issues should Iran rebuild all the other elements of its nuclear program, which were bombed in June 2025.

They must really be afraid of those Epstein materials.

Via.